@ChenZhu-Xie let’s move this discussion into its own topic

@ChenZhu-Xie you can go ahead and update the OWM library; I pushed changes so it accepts the following options:

```lua
temp = true,
humidity = true,
wind = true,
cache_time = true,
location = true,
custom_emojis = {"🌡️","💧","🍃","ℹ️","🌐"}
```

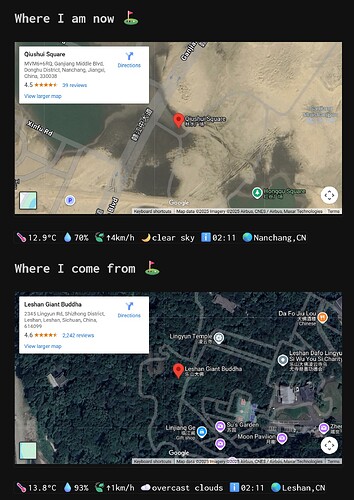

looks good & good night

& good night

Is it possible to use this plug without exposing the OpenWeatherMap API token?

A common issue arises when a website is set to read-only but publicly accessible: even if you place the key in Library/ and hide it using #meta, it remains visible to anyone familiar with SB.

This is not just a problem for this specific plugin; it likely affects others as well, such as justyns/silverbullet-ai on GitHub (a plug for SilverBullet that integrates LLM functionality).

Might need some help from @zef -_-||

In effect, an exposed key grants visitors the right to use these services, without conferring ownership of them.

I know what you mean. This is a system-wide “issue” which doesn’t have a solution yet, as far as I know. Here is another thread on the same subject:

The only workaround I could think of is a shell script next to your space directory which, when executed, prints an environment variable; you can then capture that output as a string in your config.

Here is how you could set this up. I tried it and Lua accepts it.

Create a shell script outside your space, name it secret.key, and give it the following content:

```bash
#!/bin/bash
echo "$OWM_API_KEY"
```

Now make the script executable: `chmod +x secret.key`

Add your key to the environment variables of the SilverBullet process:

```bash
OWM_API_KEY="YOURSUPERSECRETOWMAPIKEY"
```
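A slightly more defensive variant of the secret.key script above is sketched here. It assumes two things: that the value captured by `shell.run(...).stdout` is used verbatim (so the trailing newline that `echo` adds could end up in the key), and that a missing variable should make the script fail loudly instead of printing an empty line. The function name `print_owm_key` is hypothetical.

```shell
#!/bin/bash
# secret.key (defensive variant, a sketch): print the OpenWeatherMap key
# taken from the environment of the process that runs this script.
# Assumption: the caller uses stdout as-is, so no trailing newline is printed.

# print_owm_key is a hypothetical helper name.
print_owm_key() {
  if [ -z "${OWM_API_KEY:-}" ]; then
    # Report on stderr so nothing ends up in the captured stdout.
    echo "secret.key: OWM_API_KEY is not set" >&2
    return 1
  fi
  # printf '%s' writes the value exactly, without echo's newline.
  printf '%s' "$OWM_API_KEY"
}

print_owm_key
```

The non-zero exit status when the variable is missing makes a misconfigured service easier to spot than an empty key would.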

Then you can call the shell script directly in your config like this:

```lua
config.set("owm", {
  -- run the script that lives outside the space and use its output as the key
  apiKey = shell.run("../secret.key").stdout,
  refresh = 30,
  defLocation = "Nanchang",
  ...
})
```

Note that the path to secret.key is relative to your space: ../ means directly outside your space folder.
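To double-check that the script really sits outside the space folder, a small helper can resolve both paths and compare them. This is a sketch: `is_outside_space` is a hypothetical name, it assumes the relative path is resolved against the space folder (as described above), and it only resolves the directory part, not a symlink on the file itself.

```shell
#!/bin/bash
# is_outside_space SPACE_DIR SCRIPT_PATH (hypothetical helper, a sketch)
# Returns 0 if SCRIPT_PATH resolves to a location outside SPACE_DIR,
# 1 if it is inside, 2 if either directory cannot be resolved.
is_outside_space() {
  local space dir script
  # Resolve the space folder to a physical path (symlinks and .. removed).
  space="$(CDPATH= cd -- "$1" && pwd -P)" || return 2
  # Resolve the directory that contains the script the same way.
  dir="$(CDPATH= cd -- "$(dirname -- "$2")" && pwd -P)" || return 2
  script="$dir/$(basename -- "$2")"
  # Inside the space means the path starts with "<space>/".
  case "$script" in
    "$space"/*) return 1 ;;
    *) return 0 ;;
  esac
}
```

For example, `is_outside_space /path/to/space /path/to/space/../secret.key && echo "outside the space"` should print the message when the layout matches the setup above.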

Restart your SilverBullet instance/service so it picks up the environment variable.

And there you go: your key stays outside your space.

I just tried it and it works surprisingly well; this way you don’t store your API key in plain text inside your space.

CAUTION

This only works in a truly read-only setup, because if SilverBullet has write permissions, someone could add this Space Lua:

```lua
editor.flashNotification(shell.run("../secret.key").stdout)
```

and the API key would appear as a flash notification.

@zef what do you think about this workaround for secrets/API keys? Is it exploitable? Can someone execute that script in a read-only environment?

OK, it seems sufficient to store the key outside the space: either as an environment variable or simply as a string in a text file. Combined with setting the space to read-only in SB, this should be the standard approach to key isolation.
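For the text-file option, secret.key can read the key from a file instead of the environment. A minimal sketch follows; the default location `$HOME/.owm_api_key`, the override variable `OWM_KEY_FILE` and the helper `read_key_file` are made-up examples, so point them at any file the space folder cannot reach.

```shell
#!/bin/bash
# secret.key (file variant, a sketch): print the first line of a key file
# that lives outside the space, without a trailing newline.
# KEY_FILE / OWM_KEY_FILE are hypothetical; pick any path outside the space.
KEY_FILE="${OWM_KEY_FILE:-$HOME/.owm_api_key}"

read_key_file() {
  local line
  if [ ! -r "$1" ]; then
    echo "secret.key: cannot read key file: $1" >&2
    return 1
  fi
  # read fails on a last line without newline, so also accept a non-empty line.
  IFS= read -r line < "$1" || [ -n "$line" ] || return 1
  # Drop a Windows-style carriage return, then print the key exactly.
  printf '%s' "${line%$'\r'}"
}

read_key_file "$KEY_FILE"
```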

In fact, in the post “Can't deploy latest versions on fly.io” (#4 by ChenZhu-Xie), I also stored the GitHub token outside the space (i.e., the corresponding persistent volume) through init.sh.

Some experience to share:

Do banners with URLs, weather widgets and clocks all require the device to have a dedicated (even if dynamic) IPv4 address?

I used to wonder why weather information wouldn’t display on Fly.io while it worked perfectly locally. Could it be because of the default shared IPv4 configuration?

Sorry, but I can’t say anything on this topic. I run my SilverBullet instances either locally (Windows) as a background service, or in my HomeLab in a Proxmox LXC container. What I did notice: when I run the SilverBullet AI plug on my local instance with a local LLM (Ollama) model, everything works fine. But when I run it inside my Proxmox container, also with a local LLM, only a couple of commands work and the majority fail. Usually the commands that require streaming over an HTTP request fail, while normal HTTP requests work just fine. So maybe it has something to do with how SilverBullet proxies HTTP requests.
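One way to narrow this down is to send the same prompt to Ollama directly from inside the container, once with streaming off and once with it on, bypassing SilverBullet entirely. The sketch below assumes Ollama's `/api/generate` endpoint on its default port 11434 and a model named `llama3`; `OLLAMA_URL`, `OLLAMA_MODEL` and the helper names are placeholders to adjust.

```shell
#!/bin/bash
# Hypothetical diagnostic: call Ollama directly with curl, no SilverBullet
# in between. URL and model are assumptions; override them via the env.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"
OLLAMA_MODEL="${OLLAMA_MODEL:-llama3}"

# Build the JSON body for /api/generate; $1 is "true" or "false" for stream.
ollama_body() {
  printf '{"model":"%s","prompt":"Say hi","stream":%s}' "$OLLAMA_MODEL" "$1"
}

# POST one request. --no-buffer prints streamed chunks as they arrive;
# --max-time stops a stalled stream from hanging forever.
ollama_probe() {
  curl --silent --show-error --no-buffer --max-time 60 \
    -H 'Content-Type: application/json' \
    -d "$(ollama_body "$1")" \
    "$OLLAMA_URL/api/generate"
}
```

After sourcing the file, run `ollama_probe false` and then `ollama_probe true`. If both succeed inside the container, the problem more likely lies in the path between SilverBullet and Ollama; if the streaming call also stalls here, it points at the container or network setup instead.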

@justyns this is a little off-topic for this thread, but as I mentioned before, proxied HTTPS requests don’t always get through on different SilverBullet instances. Here is my success/fail experience with SilverBullet AI running a local LLM model:

- Enhance Note
- Connectivity Test - Starting - Timeout - Failed
- Insert Summary - Works 100%
- Generate Frontmatter - Kinda Works - connects to ollama - but then JSON format - Failed
- Suggest Page Name - Works 100%
- Generate Tags for note
- Chat on current Page
- Summarize note and open Summary
- Call OpenAI with note as context
- Stream response with selection or note as prompt

My question is: do you see a pattern in why some commands work and others don’t? The plug is correctly configured, so is this an SB issue or an SB AI plug issue?